In today’s AI-augmented development landscape, hiring a talented developer is only step one.

The harder and more crucial challenge is making sure they stay sharp—ready to adapt to shifting technologies, changing architecture choices, and increasingly cross-functional expectations.

The future of software development isn’t just about coding anymore. It’s about learning quickly, collaborating well, navigating ambiguity, and architecting systems in a world where AI can write boilerplate in seconds but can’t replace sound engineering judgment.

And yet, the way we assess and grow technical talent is still stuck in old habits.

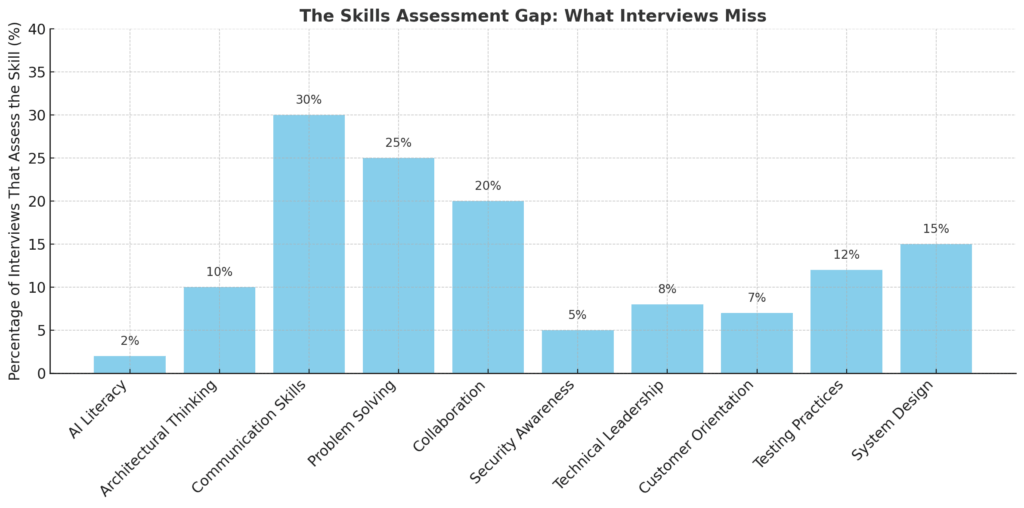

A recent Harvard Business Review article titled “Job Interviews Aren’t Evaluating the Right Skills” makes this disconnect painfully clear. While interviews are still the go-to method for assessing talent, they consistently fail to measure what companies say they care about most—especially when it comes to technical and applied skills.

Even structured interviews often repeat themselves instead of expanding into different skill areas.

And while AI is transforming software development, only 2.2% of interviews in 2025 included meaningful questions about AI. It’s no wonder that teams struggle to make confident, future-focused hiring and development decisions.

Interviews Still Miss the Mark—Even for Developers

Technical interviews were once meant to reveal how developers think. In theory, they simulate problem-solving in action.

But in practice, they often prioritize charisma, communication comfort, and time-pressured recall over the messy, collaborative work real engineering teams do every day.

Companies invest hours of developer time into live coding interviews, pulling engineers from product work and forcing candidates to solve artificial problems under a spotlight. The result? A process that’s expensive, inaccurate, and increasingly out of step with what matters.

The HBR article confirms this. It shows that across industries, companies believe they’re evaluating a wide set of skills—but actually, they’re not. Interviews overwhelmingly rely on candidates’ ability to talk about what they’d do, rather than show it.

And when it comes to technical roles, this mismatch leads to even higher hiring risk: developers who ace interviews may not thrive in real codebases, and those who stumble under pressure may never get a second chance.

In a world where AI changes what “good” looks like every month, companies need a different way to identify, support, and grow great engineers. The stakes aren’t just about hiring anymore.

They’re about keeping skills relevant, maintaining delivery speed, and avoiding the slow accumulation of technical debt that under-skilled teams leave behind.

The graph above visualizes “The Skills Assessment Gap.” It highlights how infrequently key skills, especially those critical to modern software engineering roles, are actually evaluated during interviews.

Key Takeaways:

- AI Literacy is assessed in only around 2% of interviews, despite being one of the fastest-growing skill requirements.
- Critical capabilities like Architectural Thinking, Security Awareness, and System Design also see very low evaluation rates.
- More traditional soft skills like Communication and Problem Solving are covered somewhat more often, but are still under-assessed relative to their importance.

Why Simulation-Based Assessment Is a Smarter Bet

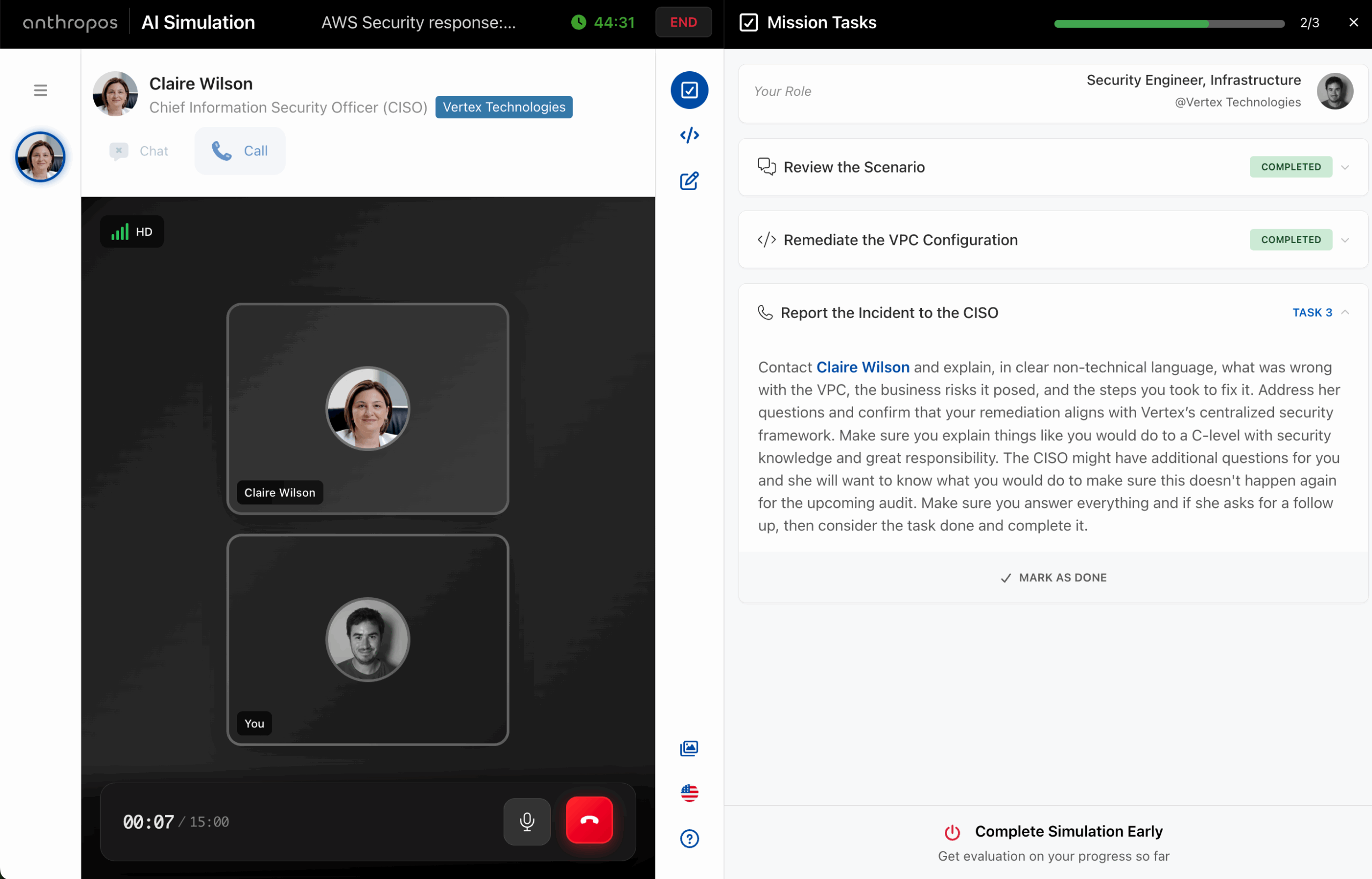

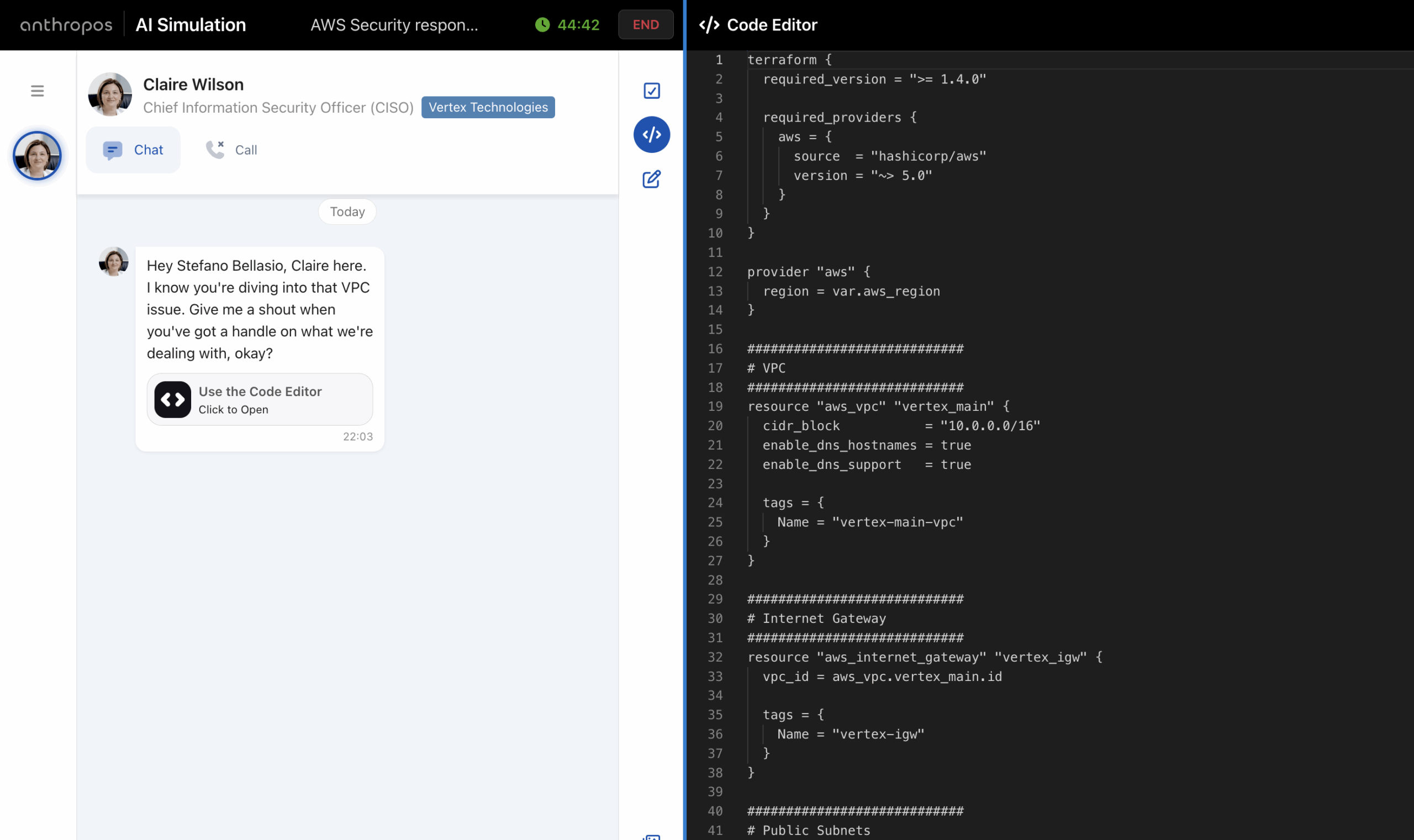

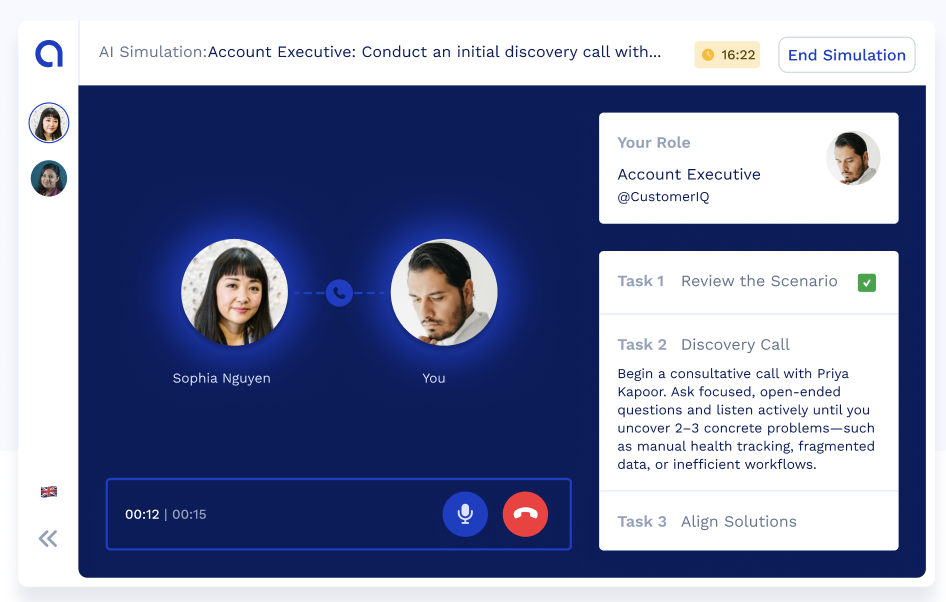

Anthropos introduces a fundamentally new approach to hiring and upskilling technical talent.

Instead of traditional tests or unstructured interviews, companies can use AI-powered simulations to place developers in real-world scenarios. These aren’t hypothetical questions.

They’re immersive experiences that reflect the actual challenges your engineers face: managing complexity, collaborating with stakeholders, and making smart decisions under constraints.

A simulation might ask a candidate or team member to redesign a service for scalability, troubleshoot a breaking change, or integrate a new API under time pressure. But it doesn’t stop at checking if the code runs.

The platform also looks at how the developer documents their decisions, communicates with simulated team members, adapts to change, and balances tradeoffs between time, cost, and code quality.

This method answers the biggest critique of modern interviews: the lack of meaningful insight into how someone actually performs. In a simulation, companies don’t just hear what a developer might do—they see it.

And when simulations are used beyond hiring, as part of an ongoing development framework, they offer even more value: real-time feedback, targeted growth, and a clear view of who’s ready for what’s next.

The Hidden Problem of Skills Decay

It’s tempting to think that once a developer is hired, the hardest part is over.

But the reality is that most teams fall behind not because they hire poorly, but because they fail to continuously invest in their people.

With AI reshaping every part of the stack, even strong developers can find themselves outdated if they’re not learning fast enough.

The World Economic Forum’s Future of Jobs Report 2020 projected that half of all employees would need reskilling by 2025.

And software engineers are on the front line of this shift. New frameworks emerge. Old ones evolve. LLMs introduce new workflows. Security requirements tighten. And hybrid architectures require new mental models.

Traditional training, which often consists of courses or videos disconnected from day-to-day work, doesn’t cut it. Developers need active learning environments—contexts where they can try, fail, iterate, and improve.

This is where Anthropos simulations shine. They don’t just assess what someone knows; they develop it. They turn technical growth into a structured, measurable process that developers actually engage with.

It’s this shift—from content consumption to experiential learning—that closes the gap between hiring and long-term performance.

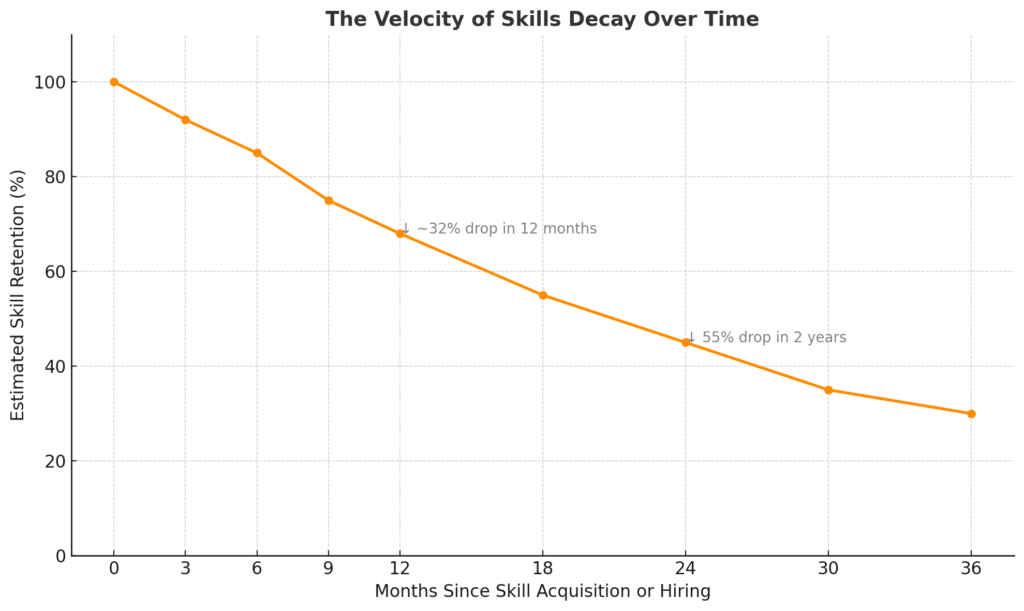

The graph above shows the velocity of skills decay over time, based on aggregated insights from sources like the WEF Future of Jobs Report and LinkedIn Learning studies.

Key Insights:

- Skills retention drops by approximately 32% within the first 12 months of acquisition if not reinforced.
- Within 2 years, workers may lose over half of what they originally knew.
- By year 3, retention can fall below 35%, especially in fast-evolving fields like tech and AI.
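One way to read these three figures is as a single compounding rate. The sketch below assumes, purely for illustration, that unreinforced skills lose a constant 32% of what remains each year. The function name `retention` and the constant-rate model are assumptions made here, not something the cited reports state.

```python
def retention(years: int, annual_loss: float = 0.32) -> float:
    """Fraction of a skill still retained after `years` without reinforcement.

    Assumes a constant annual loss applied to whatever is left
    (a hypothetical compounding model, not taken from the source reports).
    """
    if years < 0:
        raise ValueError("years must be non-negative")
    return (1.0 - annual_loss) ** years


if __name__ == "__main__":
    for year in (1, 2, 3):
        # Print the retained share as a percentage with one decimal place.
        print(f"Year {year}: {retention(year):.1%} retained")
```

Under that assumption, retention comes out at 68% after one year, roughly 46% after two, and roughly 31% after three, which lines up with the three insights above. Real decay curves will differ by skill and by how often the skill is practiced.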

Real Work. Real Insight. Real Retention.

The truth is that the best developers don’t just want to be paid well.

They want to work in environments that challenge them, grow them, and recognize their strengths. When companies only focus on recruiting, they miss this. But when they extend the same care and strategy to retention and growth, they build teams that last.

Anthropos simulations are designed not only to identify skill gaps, but to close them.

Developers get feedback after each scenario, understand where they can improve, and see what excellence looks like in context. Engineering leaders get rich, behavioral data they can use to inform promotions, support performance reviews, and plan internal mobility.

And most importantly, candidates and employees feel respected, because the simulations are tailored to their actual roles, not to some abstract metric of intelligence. A frontend developer won’t be tested on red-black trees.

They’ll be tested on how they architect components, handle state, and explain tradeoffs in a simulated design review. The process feels like work, because it is work—just safer, faster, and smarter.

It’s also worth noting that Anthropos is built to align with how companies are evolving their AI expectations. During simulations, the platform can track how developers use tools like ChatGPT or GitHub Copilot.

Instead of banning AI outright, it provides visibility: who’s using it, how they’re using it, and whether it helps or hinders the quality of the work. That’s real-world readiness. Not a constraint, but an insight.

Stop Guessing, Start Knowing

If interviews are broken—as the data clearly shows—and traditional assessments don’t reflect the real work, then companies need a better standard. Simulation-based assessment and upskilling offer that standard.

With Anthropos, hiring teams move from educated guesses to evidence. Engineering managers stop wasting senior dev time on screening, and start focusing on building. Candidates get to demonstrate what they’re truly capable of. And internal teams gain a continuous path to stay sharp, AI-literate, and collaborative.

The future of engineering doesn’t belong to those who hired well once. It belongs to those who keep hiring well, keep developing well, and keep growing.

That’s what simulations unlock—and it’s why the companies using them aren’t just hiring faster.

They’re building better teams.

September 8, 2025