The way companies evaluate and hire software developers is changing quickly.

For years, technical hiring relied heavily on coding tests and algorithm challenges—often delivered through platforms like HackerRank or similar tools.

These assessments asked candidates to solve abstract problems under time pressure, with the goal of proving raw coding ability. While they served a purpose, they rarely reflected the complexity, collaboration, and communication required in real-world software development.

Many developers found these exercises frustrating. Some considered them overly simplistic and detached from actual work; others found them unnecessarily difficult and misaligned with what they would be doing on the job.

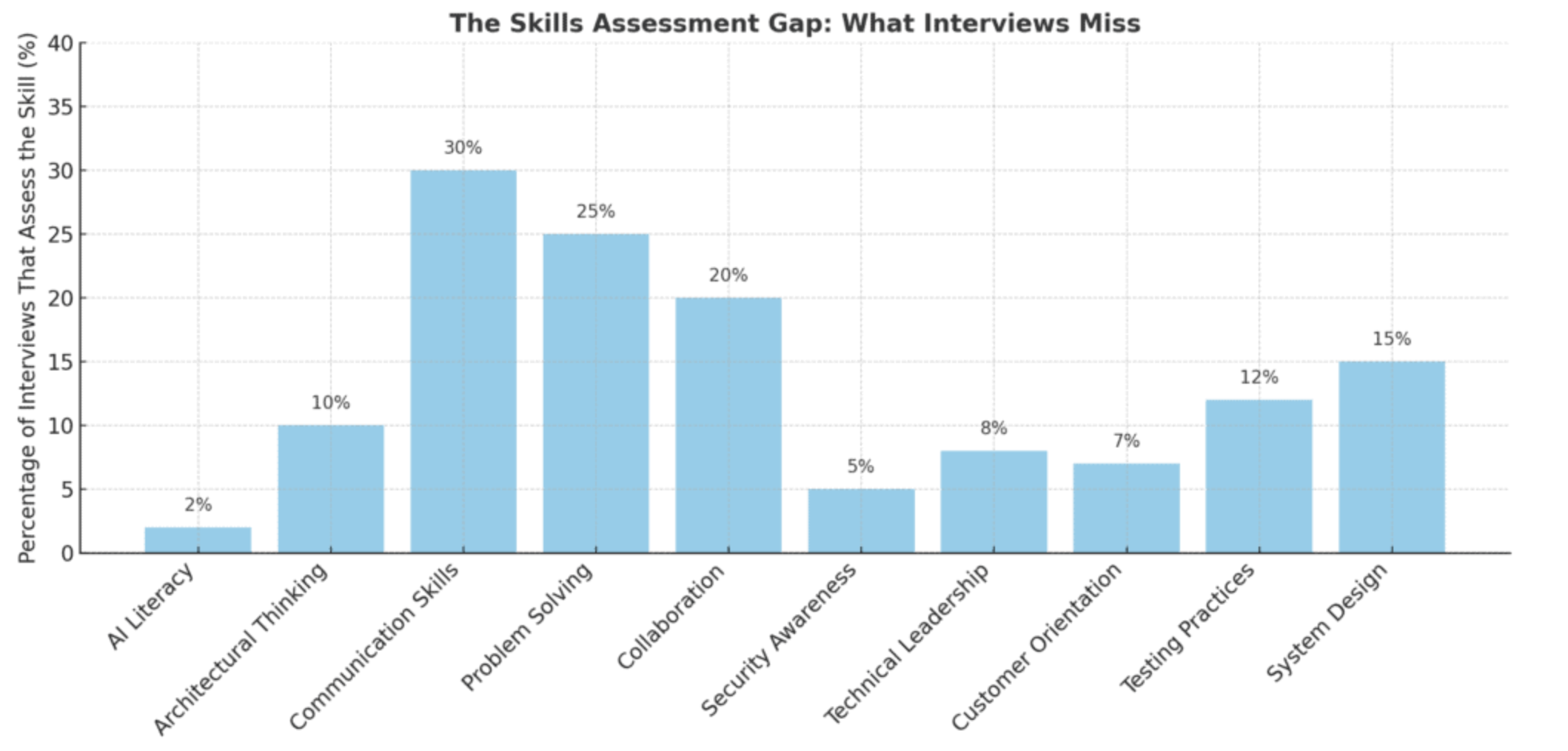

More importantly, these tests measured a narrow slice of technical skill—primarily the ability to produce code in isolation—while ignoring the broader capabilities that define success in modern engineering teams.

The shift underway today is not simply about replacing one type of technical challenge with another. It’s about redefining what “developer readiness” means in an era where AI is reshaping the way code is written, reviewed, and deployed.

The AI Disruption in Technical Hiring

Two major forces are transforming the hiring landscape for software developers. The first is the widespread availability of large language models such as ChatGPT, Claude, and others, which candidates can—and often do—use during the hiring process.

This makes it difficult to know whether a candidate’s solution to a coding problem reflects their own capability or their skill at prompting an AI assistant.

The second, and more profound, change is that AI is fundamentally altering the nature of the job itself. In an AI-augmented environment, raw coding speed is less of a differentiator.

What becomes more valuable is the ability to evaluate AI-generated code, make sound architectural choices, collaborate effectively with cross-functional teams, and adapt to changing requirements while maintaining a focus on business objectives.

Developers who can navigate this new reality need more than technical competence. They must be able to communicate clearly with non-technical stakeholders, manage competing priorities, and work in distributed, highly collaborative environments.

These are skills that traditional coding tests cannot capture—and without assessing them, companies run the risk of hiring people who can pass a test but struggle in the role.

Why Simulation-Based Assessment Changes the Game

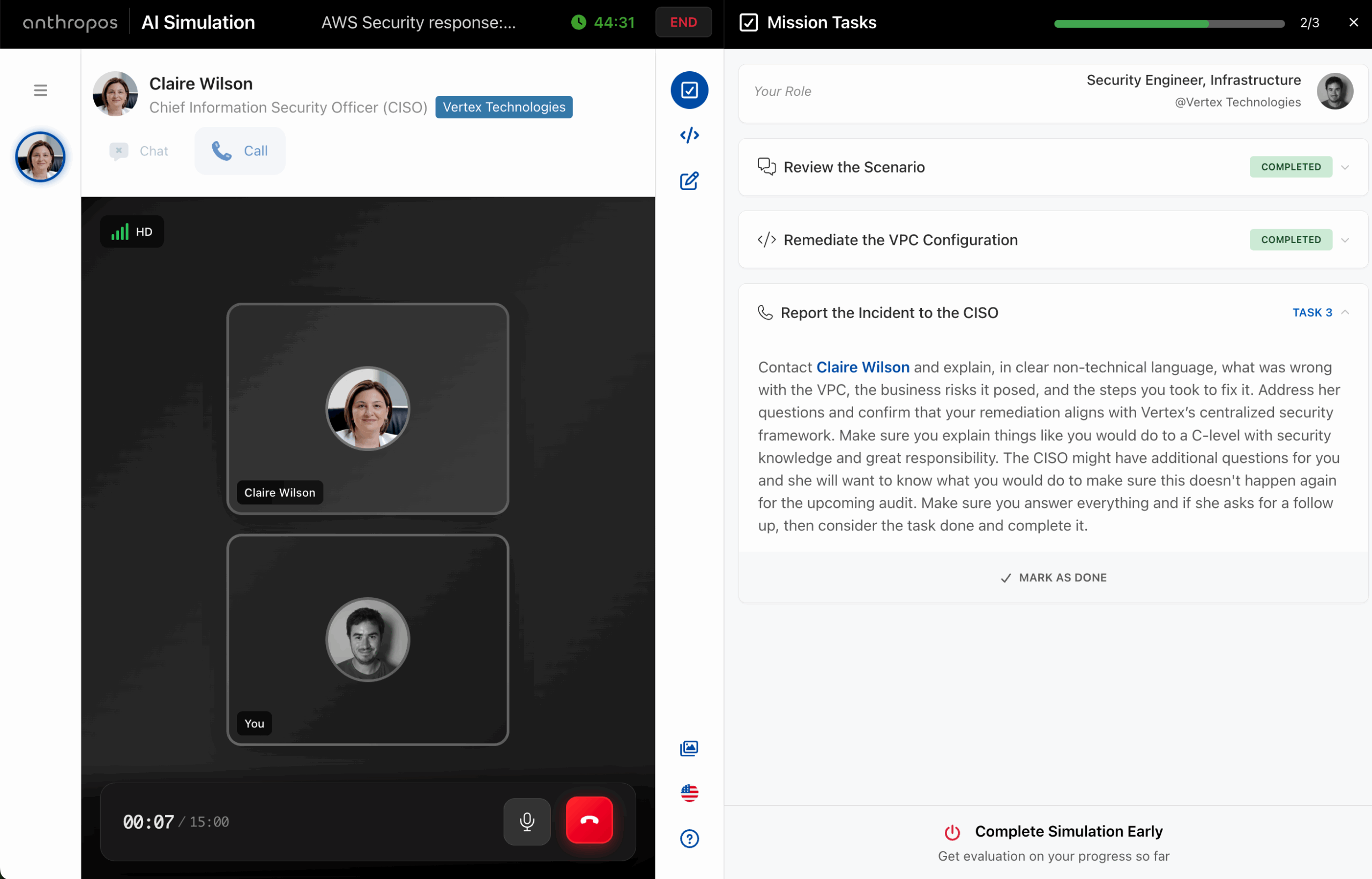

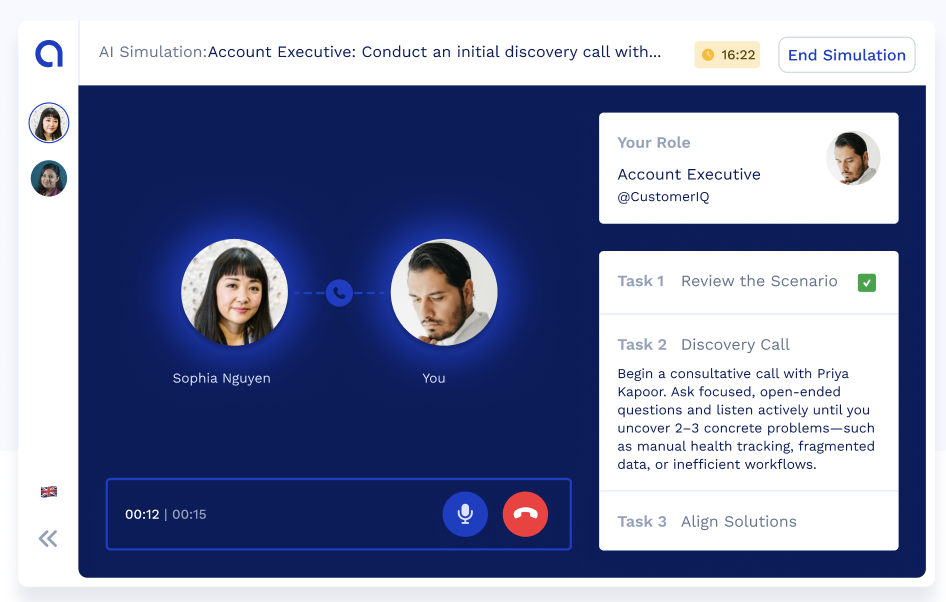

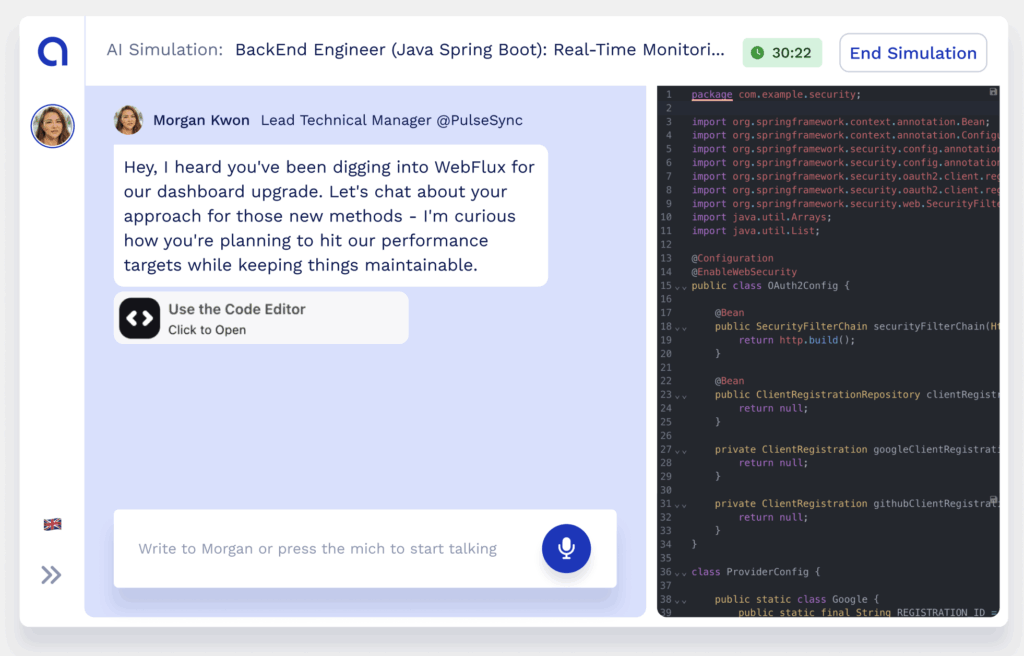

Anthropos introduces a different approach to technical hiring, one built on AI-powered simulations that mirror the realities of modern software development. Rather than asking candidates to solve abstract algorithm puzzles, Anthropos immerses them in realistic scenarios that blend technical problem-solving with communication, collaboration, and decision-making.

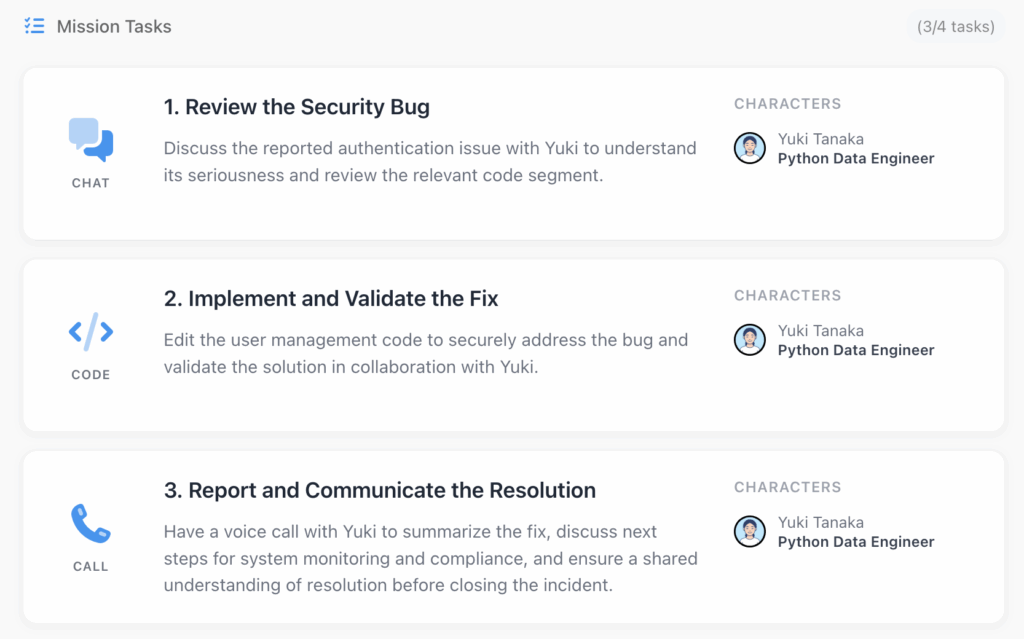

A simulation might ask a candidate to design and implement a microservice for a growing e-commerce platform. On the surface, it’s a coding task, but the simulation goes further.

It observes how the candidate gathers requirements, how they explain their technical choices, how they adapt when new constraints are introduced, and how they collaborate with simulated teammates. The result is a comprehensive view of both hard and soft skills—how they code, but also how they think, interact, and problem-solve in the context of a team and an evolving project.

This is not just a test; it’s a reality check. It gives hiring managers a direct view of how someone is likely to perform in the role, under realistic pressures and conditions.

Assessing Developer Skills: Measuring More Than Code

One of Anthropos’s strengths is its ability to assess a candidate across multiple dimensions of performance.

Technical proficiency is evaluated in ways that reflect real work—integrating APIs, refactoring legacy code, or debugging production issues—rather than artificial puzzles. Communication is measured through documentation, commit messages, and the clarity of technical explanations.

Problem-solving is evaluated by observing how candidates break down complex challenges, research new concepts, and adapt when an approach fails. Collaboration is assessed through simulated team interactions, code reviews, and the candidate’s responsiveness to feedback.
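To make the idea of scoring across several dimensions concrete, here is a minimal sketch of a scorecard. It is not Anthropos’s actual scoring model: the `Scorecard` class, the 0 to 100 scale, and the weights are all assumptions chosen for illustration. Only the four dimension names come from the text above.

```python
from dataclasses import dataclass

# Illustrative weights only (an assumption, not taken from any real platform).
DEFAULT_WEIGHTS = {
    "technical": 0.4,
    "communication": 0.2,
    "problem_solving": 0.2,
    "collaboration": 0.2,
}


@dataclass(frozen=True)
class Scorecard:
    """Hypothetical per-candidate scores, each on an assumed 0-100 scale."""
    technical: float
    communication: float
    problem_solving: float
    collaboration: float

    def weighted_total(self, weights=None):
        """Combine the four dimensions into one number using the given weights."""
        weights = weights or DEFAULT_WEIGHTS
        if abs(sum(weights.values()) - 1.0) > 1e-9:
            raise ValueError("weights must sum to 1")
        return sum(getattr(self, name) * w for name, w in weights.items())

    def weakest_dimension(self):
        """Return the name of the lowest-scoring dimension."""
        scores = {name: getattr(self, name) for name in DEFAULT_WEIGHTS}
        return min(scores, key=scores.get)


card = Scorecard(technical=80, communication=60,
                 problem_solving=70, collaboration=90)
print(card.weighted_total())
print(card.weakest_dimension())
```

Keeping the per-dimension scores alongside the total matters here: a single aggregate would hide exactly the kind of gap, such as strong code but weak communication, that this style of assessment is meant to surface.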

A unique advantage of Anthropos is its transparency around AI usage. Instead of attempting to block tools like ChatGPT, the platform detects and records how candidates interact with them—what they use AI for, how they adapt AI-generated suggestions, and whether their usage aligns with the company’s culture. This transforms AI use from a source of uncertainty into a valuable data point for hiring decisions.
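As a rough illustration of what recording AI usage as a data point could look like, the sketch below logs each interaction and summarizes it. The `AIInteraction` record, its fields, and the `summarize` helper are hypothetical and do not describe how Anthropos stores or analyzes this data. The similarity measure uses `difflib.SequenceMatcher` from the Python standard library as a simple stand-in for "how much the candidate adapted the suggestion".

```python
from collections import Counter
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class AIInteraction:
    """Hypothetical record of one candidate/assistant exchange."""
    purpose: str     # what the AI was used for, e.g. "boilerplate" or "debugging"
    suggestion: str  # code proposed by the assistant
    final_code: str  # code the candidate actually kept

    def retained_ratio(self):
        """Similarity of final code to the suggestion (1.0 means identical)."""
        return SequenceMatcher(None, self.suggestion, self.final_code).ratio()


def summarize(interactions):
    """Count interactions by purpose and how many suggestions were kept unchanged."""
    return {
        "total": len(interactions),
        "by_purpose": dict(Counter(i.purpose for i in interactions)),
        "accepted_verbatim": sum(
            1 for i in interactions if i.suggestion == i.final_code
        ),
    }


log = [
    AIInteraction("boilerplate", "x = 1", "x = 1"),
    AIInteraction("debugging", "return a+b", "return a + b"),
]
print(summarize(log))
```

A reviewer could read such a summary next to the submitted work, for example to see whether AI was used mostly for boilerplate or for core logic, and how often suggestions were accepted without changes.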

Customization for Real-World Fit

Unlike one-size-fits-all coding tests, Anthropos simulations can be tailored to a company’s exact tech stack, processes, and work culture.

A Node.js-based SaaS team can assess candidates directly in Node.js. An enterprise using Spring Boot can see how a developer applies that framework’s conventions. Architectural patterns, internal communication standards, and even code review processes can be embedded into the simulation.

This ensures that the assessment not only measures general technical ability but also reveals how a candidate would operate within the company’s specific environment. For many organizations, this customization is key to predicting whether a new hire will integrate smoothly into existing teams and workflows.

There’s also a significant shift in the candidate experience. Traditional coding tests can feel like a black hole—hours spent on a problem with no feedback and no sense of whether the work reflected the actual role. Anthropos reverses this dynamic by providing immediate, actionable feedback during simulations. Candidates leave the process having learned something useful, regardless of the outcome, and with a clearer understanding of the company and role.

This approach not only improves the employer brand but also helps attract top talent. Skilled developers are more likely to engage with a process that respects their time, mirrors real work, and offers value in return.

The Future of Developer Hiring: How to Assess Developer Skills

As AI becomes a standard part of the developer’s toolkit, companies that cling to outdated assessment methods will find themselves at a disadvantage. Coding ability alone is no longer enough to determine whether a developer can thrive in a modern, AI-augmented engineering team.

Anthropos offers a path forward, one that evaluates developers holistically, in scenarios that reflect the complexity and demands of real-world software development. It delivers richer insights, reduces the risk of a mis-hire, and aligns hiring with the skills that actually drive success in today’s environment.

The shift from coding tests to simulation-based assessment isn’t just an incremental improvement; it’s a fundamental change in how we understand and measure developer readiness. Companies that make this shift can build stronger teams, make better hiring decisions, and prepare for the realities of software development in the AI era.

August 25, 2025